How Banks Can Combat Generative AI-Enabled Fraud and Deepfakes

Generative AI (GenAI) can create highly realistic content, including images, text, audio, and synthetic data, and it is reshaping many industries as a result. As these technologies advance, however, they are also becoming tools for malicious actors. Criminals can now use GenAI to produce hyper-realistic deepfakes, falsify documents, and fabricate other synthetic media, which is driving an increase in sophisticated financial fraud, particularly in the banking sector.

In this post, we explore the new types of fraud that GenAI enables and the steps financial institutions can take to protect themselves and safeguard their operations.

Types of GenAI-Enabled Financial Fraud

As GenAI technology evolves, it provides criminals with new avenues to commit fraud, forcing financial institutions to constantly update their defenses. Some of the most prominent forms of fraud driven by GenAI include:

- Deepfakes

Generative AI can produce hyper-realistic videos and images, enabling criminals to impersonate executives or other influential figures in financial institutions. These deepfakes can be used to manipulate employees into authorizing large financial transfers or to create fake investment schemes.

In a notable example from early 2024, a finance employee at a major UK multinational was tricked into transferring $25 million after a video call in which a deepfake impersonated the company’s chief financial officer.

- KYC Impersonation

Generative AI can also be used to bypass Know Your Customer (KYC) protocols, especially video-based verification methods. Criminals can generate fake but convincing identity documents or facial features, allowing them to impersonate legitimate individuals and gain unauthorized access to financial services.

Deloitte’s 2024 report projects that GenAI could drive fraud losses in the U.S. from $12.3 billion in 2023 to $40 billion by 2027, with KYC fraud being a significant contributor.

- Voice Spoofing

Voice cloning technology powered by GenAI allows fraudsters to replicate a person’s voice, complete with specific accents, intonations, and emotional cues. These cloned voices are used to trick individuals into authorizing transactions or providing sensitive information.

A recent case in Newfoundland saw fraudsters using voice cloning technology to scam senior citizens out of $200,000 by impersonating family members in distress.

- Synthetic Identity Fraud

Synthetic identities are created by combining real and fabricated personal information, allowing criminals to open bank accounts or apply for credit under false pretenses. With the capabilities of GenAI, producing synthetic IDs has become easier and more realistic, making it harder for financial institutions to detect fraud.

GenAI can generate fake IDs, such as a driver’s license, using real people’s photos found online. This method of fraud is becoming increasingly difficult for banks to combat, especially when the synthetic identities are very convincing.

- AI-Generated Phishing Attacks

Criminals are using GenAI to create highly convincing phishing emails that appear to come from trusted sources. These spear phishing attacks are more effective due to AI’s ability to personalize messages based on data obtained from social media profiles, data breaches, and other sources.

Reports indicate that AI-generated phishing emails have a higher click-through rate than those crafted by humans, making it easier for criminals to deceive their targets.

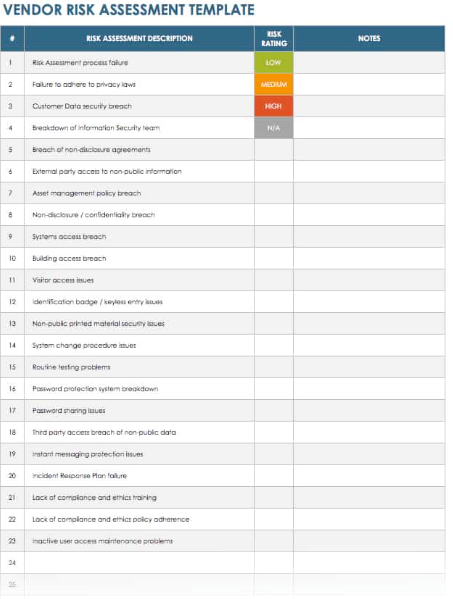

Strategies to Combat GenAI-Enabled Financial Fraud

As GenAI-driven threats become more prevalent, financial institutions must adopt advanced tools and strategies to detect and prevent these types of fraud. Below are some key tactics that can help banks stay ahead:

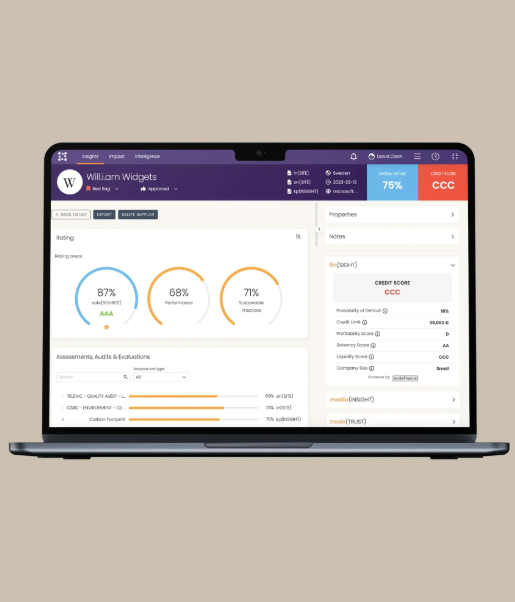

- Leverage AI to Detect Fraud

Banks can fight back against AI-driven fraud by using AI themselves. By implementing machine learning models trained on both real and synthetic data, financial institutions can detect deepfakes and other fraudulent content. AI algorithms can identify subtle discrepancies between real and synthetic media that may not be visible to the human eye, helping to stop fraud before it escalates.

However, this is an ongoing battle. As AI systems improve, so do the methods used by criminals, making it a continuous cycle of improvement and adaptation.

- Implement Multi-Factor and Biometric Authentication

Using multi-factor authentication (MFA) along with biometric verification (like facial recognition or fingerprint scanning) can significantly reduce the risk of fraud. Because each factor must be defeated separately, spoofing a single one, for example a face with a deepfake, is not enough on its own to bypass identification protocols.

Additionally, banks can enhance deepfake detection by deploying systems that assign a “confidence score” to incoming audio or video, indicating how likely it is to be synthetic, and that prompt further verification when the score is high.
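As a rough illustration of how such a score might drive further verification, the Python sketch below maps a detector's output to one of three actions. The detector itself is out of scope here. The `MediaCheck` type, the `triage` function, and both thresholds are hypothetical names and values chosen for this example, not taken from any particular product.

```python
from dataclasses import dataclass

# Hypothetical thresholds for illustration only. A real deployment would
# calibrate them against labeled data and the bank's own risk appetite.
STEP_UP_THRESHOLD = 0.30
BLOCK_THRESHOLD = 0.80


@dataclass
class MediaCheck:
    session_id: str
    synthetic_score: float  # 0.0 = likely genuine, 1.0 = likely synthetic


def triage(check: MediaCheck) -> str:
    """Map a detector score to an action: 'allow', 'step_up', or 'block'."""
    if not 0.0 <= check.synthetic_score <= 1.0:
        raise ValueError("synthetic_score must be between 0 and 1")
    if check.synthetic_score >= BLOCK_THRESHOLD:
        return "block"      # stop the session and refer it to a fraud analyst
    if check.synthetic_score >= STEP_UP_THRESHOLD:
        return "step_up"    # ask for an additional, independent factor
    return "allow"


# Example: a borderline video call triggers extra verification.
action = triage(MediaCheck(session_id="demo-session", synthetic_score=0.45))
```

Keeping a middle "step up" band, rather than a single allow-or-block cutoff, lets uncertain cases go to additional verification instead of being rejected outright.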

- Utilize Behavioral Analytics

Behavioral analytics can help identify fraudulent activities by analyzing patterns in customer behavior. By tracking unusual actions, such as high-value transactions or sudden changes in login locations, banks can flag potential fraud in real time.

For example, if a customer in New York makes a grocery purchase and then, just minutes later, buys luxury goods in Tokyo, this unusual behavior can raise a red flag for fraud detection systems.
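The New York and Tokyo scenario is often described as an "impossible travel" check. Below is a minimal sketch in Python, assuming each transaction carries a timestamp and approximate coordinates. It computes the great-circle distance between two consecutive transactions and flags the pair if the implied travel speed exceeds what a commercial flight could manage. The speed limit, the minimum distance, and the function names are illustrative assumptions rather than an industry standard.

```python
import math
from datetime import datetime, timedelta

EARTH_RADIUS_KM = 6371.0
MAX_PLAUSIBLE_SPEED_KMH = 900.0  # assumption: roughly airliner cruising speed
MIN_DISTANCE_KM = 50.0           # assumption: ignore small location noise


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def is_impossible_travel(prev, curr):
    """Return True if moving from prev to curr implies an implausible speed.

    Each argument is a (timestamp, latitude, longitude) tuple, with prev
    being the earlier transaction.
    """
    distance = haversine_km(prev[1], prev[2], curr[1], curr[2])
    if distance < MIN_DISTANCE_KM:
        return False
    hours = (curr[0] - prev[0]).total_seconds() / 3600
    if hours <= 0:
        return True  # far apart at the same instant
    return distance / hours > MAX_PLAUSIBLE_SPEED_KMH


# Groceries in New York, then luxury goods in Tokyo ten minutes later.
t0 = datetime(2024, 5, 1, 12, 0)
new_york = (t0, 40.7128, -74.0060)
tokyo = (t0 + timedelta(minutes=10), 35.6762, 139.6503)
flagged = is_impossible_travel(new_york, tokyo)  # implied speed is far above the limit
```

A rule like this fits in-person card transactions best. Online purchases would likely need a different location signal, such as the customer's device location, because the merchant's address says little about where the customer actually is.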

- Collaborate with Industry Partners and Third Parties

Collaboration with trusted third-party vendors and industry partners can help banks build robust defenses against emerging threats. By sharing data and insights on GenAI-enabled fraud, financial institutions can quickly identify new fraud tactics and improve their defenses.

Partnering with fintech firms and other financial institutions also enables the pooling of resources and expertise, providing a collective defense against AI-powered fraud.

- Educate Customers

One of the most effective ways to combat GenAI-driven fraud is to educate customers about the risks. By using various communication channels—such as push notifications, emails, and social media—banks can inform customers about AI-enabled scams like voice spoofing or phishing attacks.

For example, a bank could send out a notification warning customers about the dangers of AI-generated phishing emails and provide tips on recognizing them. Banks could also host webinars to educate customers on how to spot AI-based fraud and protect themselves.

- Invest in Continuous Training and Development

To stay ahead of evolving fraud tactics, banks must invest in continuous employee training. Ensuring that staff are well-versed in the latest fraud detection methods, including recognizing deepfakes and synthetic identities, is essential to responding quickly and effectively to new threats.

Digital learning platforms can be used to upskill employees on the latest fraud prevention technologies and provide hands-on experience in detecting and addressing AI-powered fraud.

Conclusion

As generative AI continues to evolve, financial institutions must remain proactive in defending against increasingly sophisticated fraud techniques like deepfakes, synthetic identities, and AI-generated phishing. By leveraging AI-powered fraud detection systems, adopting multi-layered authentication protocols, collaborating with industry partners, and educating customers, banks can enhance their defenses and reduce the risk of financial fraud.

Staying vigilant, adapting to emerging threats, and investing in continuous learning will be key to successfully combating GenAI-driven fraud in the financial sector.
